GenHelm

Last month I released the first commercial version of GenHelm, a software product that was 30 years in the making. Read on if you are interested in learning how a product, first conceived in the 1990s, could take so long to be realised.

My story begins in 1985, when I had recently been hired by a software company intent on building packaged financial applications (General Ledger, Accounts Payable, Accounts Receivable, etc.). Building packaged software, to be used by many different companies, is much more difficult than building software for one specific client. In the case of financial applications, every company has a unique chart-of-accounts that is specific to their business. For example, a company that makes pet products might want their account structure to reflect various divisions (say, dog division, cat division, etc.) and various products within each division (leashes, food products, toys, etc.). This way they can track and consolidate expenses and revenue to determine margins on various product lines, et cetera.

The typical means by which packaged applications handle different requirements across their customer base is by using configuration files. Packages that cater to a wide variety of users end up needing dozens or even hundreds of config files. Often, much of the internal complexity of such systems is associated with the handling of "client differences" (configuration logic) rather than solving actual business problems.

To circumvent the need to implement such complex configuration handling, we decided to take a different approach. Rather than building one common application that uses config files to determine how to behave at runtime, we built generators that could be fed the specifics of each new customer and use this to generate a financial application that is specific to that customer. The advantage of this approach is that each customer would essentially get a custom application that caters to their specific requirements and the application would not be cluttered with all kinds of tangential logic needed to deal with every possible combination of requirements (across all companies).
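As a rough illustration of the generation approach described above (this is not Construct's or GenHelm's actual code), the sketch below feeds a hypothetical customer specification to a small generator that emits source code tailored to that customer. The names `CustomerSpec`, `generate_validator` and `is_valid_account`, as well as the two-segment account layout, are assumptions made purely for this example.

```python
from dataclasses import dataclass


@dataclass
class CustomerSpec:
    """Hypothetical description of one customer's chart-of-accounts layout."""
    name: str
    divisions: list[str]
    products: list[str]


def generate_validator(spec: CustomerSpec) -> str:
    """Return Python source for an account validator specific to one customer."""
    return (
        f"# Generated for {spec.name}; regenerate rather than edit by hand.\n"
        f"DIVISIONS = {sorted(spec.divisions)!r}\n"
        f"PRODUCTS = {sorted(spec.products)!r}\n"
        "\n"
        "def is_valid_account(division, product):\n"
        "    return division in DIVISIONS and product in PRODUCTS\n"
    )


pet_company = CustomerSpec("Pet Products Inc.", ["dog", "cat"], ["leashes", "food", "toys"])
source = generate_validator(pet_company)

# Load the generated code to show that it stands alone:
# it contains no configuration lookups or logic for other customers.
namespace: dict = {}
exec(source, namespace)
print(namespace["is_valid_account"]("dog", "toys"))   # True
print(namespace["is_valid_account"]("bird", "toys"))  # False
```

The generated module contains only what this one customer needs. A config-driven design would instead ship the same code to everyone and consult each customer's settings at runtime.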

Our initial approach was to have a separate generator for each component that required customisation. As we cranked out more and more generators, we realised that many of the generators looked alike. For example, the generator to create an account maintenance program was very similar to the generator to create a user maintenance program. At this point we decided to take a step back and have fewer generators by making each one more generic. Before long, we realised that these generators could be used to generate not only financial applications but also components for many common types of applications.

At this point, we shifted our focus from developing financial applications to developing generation technology. I was put in charge of this project and before long a new product, named Construct, was born. I led the Construct development team for 13 years and was the main architect and developer of the product during that time. During this period, Construct evolved from a technology that could build stand-alone applications running exclusively on the mainframe to one that could build 3-tier web applications in which Visual Basic served web pages derived from information obtained by calling mainframe services written in Natural. Over 30 years later, there are still many companies using Construct, much of it running the exact same code I wrote back in the 1990s.

Although Construct was a very powerful product for its time, it had limitations that could not be easily overcome. For example, Natural, the programming language used to implement Construct, was a procedural language without support for object orientation. While developing Construct, code generation became my passion and I had a lot of ideas for what would make a Utopian generation environment based on object-oriented principles.

In 2004 I co-founded a web consulting firm. The basic premise behind this company was that we would leverage development resources on a contractual basis. That is, rather than hiring a pool of developers and hoping we could bring in enough work to pay for them, we would first find the work and then engage contractors to implement the projects as needed. At the time, it seemed like a good idea since it would allow for infinite scale and we could contract developers who had experience in whatever type of application our customers wanted us to build.

This approach worked well for three or four projects. However, when our customers asked us to make changes and enhancements to these early projects, we did not always have access to the developers who had built the software. Each project had been built by different developers employing different technologies and standards. We quickly realised that it would be impossible for us to support the very applications "we" developed if we continued with this strategy.

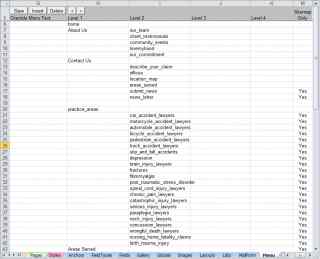

The premise of having a scalable team of developers was very sound but what needed to change was the strategy around the technology and methodology used to implement our web projects. If all developers used common tools and components and followed the same development approach, any developer could easily perform maintenance on applications, even if they were not involved in creating them. With my background in Construct, I felt strongly that our development tools should be heavily based on generation technology. This is the point where I embarked on building GenHelm Version .01. This tool, code named PHPXL, allowed web developers to describe the website they wanted to build using nothing but an Excel spreadsheet. Each tab in the spreadsheet represented a "model". A model is basically a class used to generate a certain type of component. The spreadsheet used Excel macros to generate PHP code according to what the developer entered. For example, this screen shows how a site's menu could be configured in PHPXL:

Despite being rather unorthodox, PHPXL worked very well and was used to build and maintain several hundred websites, many of which are still in production to this day. Nevertheless, this approach suffered from three main problems:

To overcome these key issues, along with dozens of more minor issues, I embarked on building what would eventually become GenHelm.

Initially, the plan was to build a tool that was fully backward compatible with PHPXL so that we could easily transition PHPXL sites into GenHelm. Instead of using Excel to capture the specifications for the website, this would be done using a web browser. Eventually, efforts to maintain backward compatibility became too constrictive so I decided to abandon this constraint. Nevertheless, this early objective was very fruitful because I was able to use PHPXL to build the initial components of GenHelm, then gradually flip these components into GenHelm itself once the required model was available. Building a full-blown web development environment using itself is very challenging but also extremely beneficial. Since the IDE runs on the identical framework used by all generated sites, this framework is continuously being exercised during the development process. Usually, the minute you introduce a bug in the framework you will know about it since you will likely be calling this very same component to handle some aspect of the IDE.

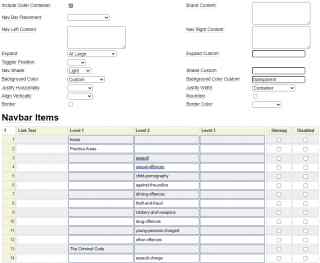

Below is a screen shot of the "menu" model in GenHelm. You can see that it is loosely based on what was originally used in PHPXL but it has been extended to include many featured needed to handle modern responsive websites.

One of the more interesting models in GenHelm is named the "model" model. It is so named because this model is used to generate the 70 or so models that ship with GenHelm. One of the most interesting aspects of this model is that it actually generates itself, not unlike a surgeon performing their own heart transplant.

I have been using the familiar term IDE (Integrated Development Environment) to describe GenHelm. In actual fact, a more descriptive acronym would be IGE (Integrated Generation Environment). That's because every component of the websites developed in GenHelm is generated to some degree.

If you have been developing code for more than a few years, you have probably started work on a component only to realise that it is very similar to something you developed in the past. At this point you might go back and try to find the class that you worked on before and use this to get a head start on your new component. Cloning programs in this way may improve your productivity to some extent but it is often fraught with peril. Here are some of the pitfalls of this strategy:

With GenHelm, the development methodology involves creating models (generators) for those programs that tend to be needed from one website to the next. Essentially, a generator is performing the "clone" operation in a much more controlled and sophisticated way. This is done at the specification level, not the code level. The actual code is incidental and never needs to be maintained by hand. The beauty of this approach is that it becomes very easy to update the code to take advantage of new features and capabilities. For example, if you had built all of your models to generate HTML 4 code, it would be a fairly simple exercise to adapt them to generate HTML 5. In so doing, all of the websites that were built using earlier versions of the models could be regenerated and instantly modernised.
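The HTML 4 to HTML 5 scenario can be sketched in a few lines. This is only an illustration under assumed names (`MENU_SPEC`, `render_menu_html4`, `render_menu_html5`), not GenHelm's own generator code. The point is that the specification is what gets maintained, while the output is disposable and can be regenerated whenever the generator improves.

```python
import html

# The specification: the only artefact a developer maintains (hypothetical format).
MENU_SPEC = [("Home", "/"), ("Products", "/products"), ("Contact", "/contact")]


def _links(items):
    """Render each (label, url) pair as a list item, escaping both values."""
    return "".join(
        f'<li><a href="{html.escape(url)}">{html.escape(label)}</a></li>'
        for label, url in items
    )


def render_menu_html4(items):
    """Older generator: wraps the menu in a generic div."""
    return f'<div id="menu"><ul>{_links(items)}</ul></div>'


def render_menu_html5(items):
    """Updated generator: same specification, semantic nav element."""
    return f"<nav><ul>{_links(items)}</ul></nav>"


old_output = render_menu_html4(MENU_SPEC)
new_output = render_menu_html5(MENU_SPEC)  # regenerated; the spec is untouched
print(new_output)
```

Every site whose menu was described by such a specification could pick up the new markup simply by being regenerated, with no hand edits to the generated code.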

A model can generate any number of components but most models just generate one or two files. This number can vary based on the parameters entered into the model. For example, when using the "styles" model you can elect to generate only an unminified stylesheet, only a minified stylesheet or both. The model takes care of deleting components that are no longer applicable to the current parameter selection so you don't end up with unused baggage in your sites.
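The clean-up behaviour described above might look something like the following sketch. The function names, file naming scheme and the deliberately naive minifier are all assumptions for illustration; GenHelm's "styles" model is not shown in this article and may work quite differently.

```python
import re
import tempfile
from pathlib import Path


def minify(css: str) -> str:
    """Very naive CSS minifier, for illustration only."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # drop comments
    css = re.sub(r"\s+", " ", css)                   # collapse whitespace
    return re.sub(r"\s*([{}:;,])\s*", r"\1", css).strip()


def generate_styles(out_dir: Path, name: str, css: str,
                    unminified: bool, minified: bool) -> list[str]:
    """Write the selected stylesheets and delete any left over from earlier runs."""
    wanted = {}
    if unminified:
        wanted[f"{name}.css"] = css
    if minified:
        wanted[f"{name}.min.css"] = minify(css)

    # Every file this model could ever produce is either rewritten or removed.
    for filename in (f"{name}.css", f"{name}.min.css"):
        path = out_dir / filename
        if filename in wanted:
            path.write_text(wanted[filename])
        elif path.exists():
            path.unlink()
    return sorted(p.name for p in out_dir.iterdir())


css = "body {\n  margin: 0;  /* reset */\n}\n"
with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp)
    first = generate_styles(out, "site", css, unminified=True, minified=True)
    second = generate_styles(out, "site", css, unminified=False, minified=True)

print(first)   # ['site.css', 'site.min.css']
print(second)  # ['site.min.css']
```

Because the generator knows the full set of files it is responsible for, changing a parameter and regenerating leaves no stale output behind.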

One of the main reasons that it took so long to develop GenHelm is that modern web systems include a large number of complex components such as photo galleries, blogs, carousels, tabbed controls, responsive grids, maps, videos, etc. I knew that if developers tried to use GenHelm and found that they could not build sophisticated websites, it would fall flat.

Developers tend to distrust generators, much as many people are sceptical of driverless cars. People inherently believe that they can do certain things better than computers or machines. Although this is generally not the case, I knew that if developers discovered bugs in the generated code they would not embrace the methodology promoted by GenHelm.

Another aspect that took a long time was the productization of GenHelm. It's one thing to build a product for one customer or for a specific requirement; it is quite another to build a generic tool that can be used by thousands of companies. If you follow the Agile development methodology you may be familiar with the "MVP", which stands for Minimum Viable Product. This promotes releasing something with minimum functionality to get it into the hands of the customer in order to get early feedback and allow the customer to shape the direction of the product. This sounds great in theory but the problem is that having customers drastically slows down the development process because of the need for backward compatibility. This is one of the main reasons that products in the field don't tend to evolve very fast and why I was far more concerned with having a high-quality, feature-rich, bug-free product than I was with getting the product to market by a certain date.

In closing, I am looking forward to working with customers who will embrace the prospect of developing websites in a way to which they may not be accustomed, and to helping them reap the benefits of doing so. My team and I have been developing websites in GenHelm for the past three years so I am extremely confident in its capabilities and robustness.